Why does technology always have to create new words, new meanings for old words or new meanings for new words? The term AI is used everywhere. But AI is such a broad field that we should be more specific. In this post, I hope to shed some light on the different words that are particularly relevant to today’s breakthroughs.

Your Handy Dictionary

Like me, you’ll probably need to regularly remind yourself of these definitions. There are many, and this list will grow continuously. I have summarised all of the keywords in this table, which is a helpful reminder if you are using this post as a quick reference. For more details, jump into the next section!

| Term | Definition |
| --- | --- |
| AI (Artificial Intelligence) | AI is the topic within computer science which enables machines to have intelligence. |
| AGI (Artificial General Intelligence) | AGI is the idea that an AI system possesses the same level of intelligence and reasoning as a human. |
| ML (Machine Learning) | Machine Learning is a large sub-category of AI. It refers to models which are built to solve a task using a learning algorithm and a large collection of real-world data. |
| Neural Networks | Neural networks are a type of model which involves layers of interconnected software ‘neurons’. |
| Deep Learning | Deep Learning applies a learning algorithm over very large datasets to train a neural network. |
| RL (Reinforcement Learning) | Reinforcement Learning is a type of Machine Learning which trains a model using interactions with, and feedback from, an environment. |
| GenAI (Generative AI) | Generative AI is a type of AI which uses a model to generate content, such as text, audio and images. |
| LLM (Large Language Model) | Large language models are large neural networks which accept text input and respond with text output. |
| Reasoning Models | Reasoning models are LLMs which have been explicitly tuned to pause for thinking time before answering, aiming to create models that are better at reasoning. |
| Emergent Behaviour | Emergence is the phenomenon where a model turns out to be able to complete a new task which wasn’t intentionally included in the training process. |
| Agents | An agent is a type of AI which not only makes decisions, but also executes actions based upon those decisions. |

Let’s Dive in

AI (Artificial Intelligence)

Let’s start at the top. In simple terms, AI is the topic within computer science which enables machines to have intelligence. AI is the scientific field of computational intelligence, much like medicine is the scientific field of human health. Experts in AI are not necessarily experts in every part of AI, much like doctors aren’t masters of every part of medicine.

Here’s the full extract from the Wikipedia definition for AI:

Artificial intelligence (AI), in its broadest sense, is intelligence exhibited by machines, particularly computer systems. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximise their chances of achieving defined goals.

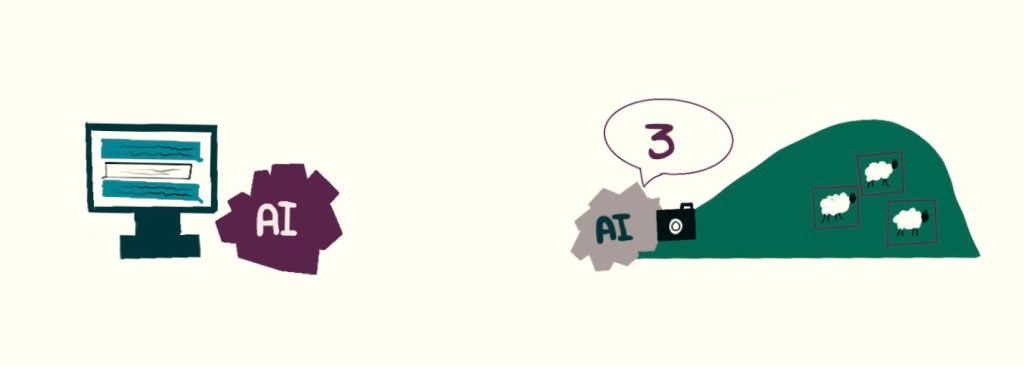

What do we mean by intelligence, and what do we mean by methods and software? It can get very complicated, and it is highly dependent on the application. But in my eyes, intelligence should be described in terms of a task. A machine is intelligent at a task if it can observe the world and come up with a decision for that task, without input from a human. The extent and scope of the task and the decisions it can make are intertwined so heavily with an application that it is hard to give more detail without an example.

For example, you could make a ‘Sheep Identifier’ AI which is intelligent at identifying sheep in a field. Given a picture of a field, and a task of finding the sheep in the field, this ‘Sheep Identifier’ AI picks out all your sheep in the field – without your assistance.

Or you could create a Customer Service AI which answers questions in a web chat about the offerings of a company. This AI would be intelligent at answering questions from customers of a company.

Both are AIs, but the Customer Service AI would not be able to identify sheep in a field. Nor could the Sheep Identifier AI answer customer service questions. As models are becoming more capable, the number of tasks they can complete is increasing. You can have both a Sheep Identifier AI and a Customer Service AI in one now!

AGI (Artificial General Intelligence)

For some, AGI is the ultimate goal of AI research. AGI is the idea that an AI system possesses the same level of intelligence and reasoning as a human. It might even have a more advanced level. It is debatable whether this is hypothetical or upcoming. It is also debatable what human-level intelligence means. Sam Altman (CEO of OpenAI) believes in AGI and is actively seeking AGI for the benefit of humanity. AGI as a topic can be quite divisive, with some getting worried about the impacts of AGI on the world. As of 2025, we have not reached AGI, and we still have a long way to go!

ML (Machine Learning)

Machine Learning is a large sub-category of AI. It refers to models which are built to solve a task using a learning algorithm and a large collection of real-world data. A model is initialised, and during learning (the build process), it is shown examples from the real world. Based on how well the model performs in these examples, the parameters of the model are updated. Essentially the model teaches itself how to do better, by experience!
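To make that loop a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made up for illustration: the toy data (which follows y = 2x + 1), the tiny two-parameter model, and the names `examples` and `train`. Real machine learning uses far bigger models and datasets, but the initialise, show examples, update parameters cycle is the same idea.

```python
# Toy "real-world" data, invented for this example: targets follow y = 2x + 1.
examples = [(x, 2 * x + 1) for x in range(-5, 6)]

def train(examples, learning_rate=0.01, epochs=500):
    """Fit y = weight * x + bias by gradient descent on the squared error."""
    weight, bias = 0.0, 0.0  # the model is initialised
    for _ in range(epochs):
        for x, y in examples:
            prediction = weight * x + bias  # the model is shown an example
            error = prediction - y          # how well did it perform?
            # Update the parameters to reduce the error on this example.
            weight -= learning_rate * error * x
            bias -= learning_rate * error
    return weight, bias

weight, bias = train(examples)
print(f"learned weight={weight:.2f}, bias={bias:.2f}")
```

The model is never told the rule behind the data. It only sees examples, and its two parameters drift towards values that reproduce them.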

Machine Learning itself can be broken down into subcategories depending on how the AI model is being built (for example, supervised or unsupervised learning). I’m going to leave a lot of these categories out for now, as the topic is huge and not all of it is relevant to today’s advances. But just know that a lot of AI models are built using machine learning.

Neural Networks

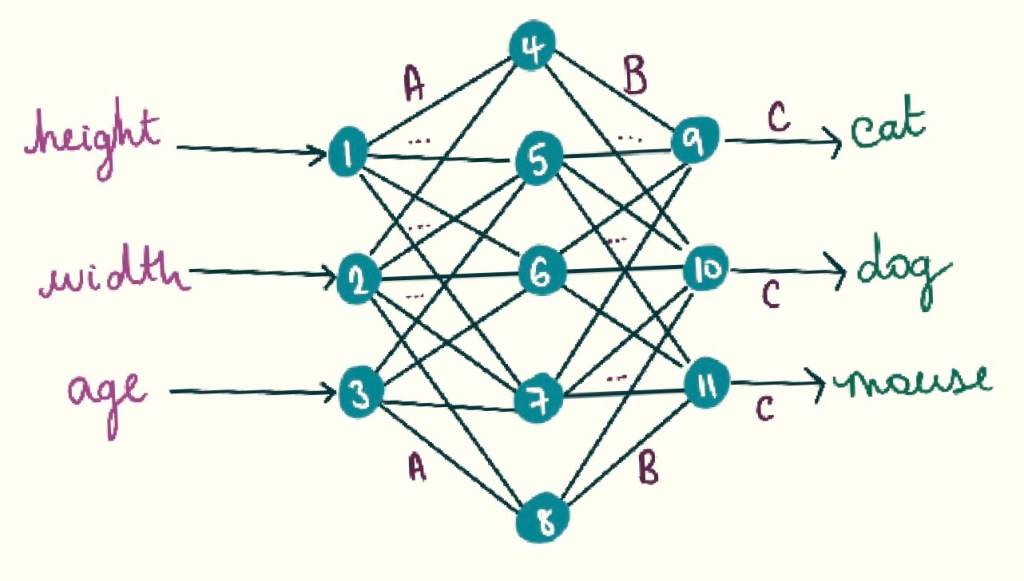

Neural networks are one of the most important terms in AI. We have already used the vague term ‘model’ several times. Well, a model is the bit of software which is doing the decision-making. One of the most pivotal types of model in Machine Learning is the Neural Network.

Neural networks are a type of model which involves layers of interconnected software ‘neurons’. The software neurons are inspired by the human brain. They are represented in a computer as parameters and functions. This combination of parameter and function is where the intelligence is contained. During learning, the model parameters are slowly tuned to get better and better at the task.

Essentially, a model is a large collection of numbers, connected with mathematical functions (like addition, multiplication and the logarithm). It is very very hard, as a human, to try to understand the model by only looking at its parameters and functions. It is in the interconnectedness and scale of the model, combined with input data, that the intelligence is revealed.
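As a rough illustration of "numbers connected with functions", here is a tiny hand-written network in Python. The parameter values are hypothetical and hand-picked (a trained model would learn them), and the names `neuron` and `forward` are made up for this sketch.

```python
import math

# Hypothetical, hand-picked parameters (a real model would learn these).
hidden_weights = [[0.5, -0.2], [0.8, 0.1]]  # 2 inputs -> 2 hidden neurons
hidden_biases = [0.0, -0.1]
output_weights = [1.0, -1.5]                # 2 hidden neurons -> 1 output
output_bias = 0.2

def sigmoid(value):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))

def neuron(inputs, weights, bias):
    """One software neuron: multiply, add, then apply a function."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)

def forward(inputs):
    """Pass the inputs through the hidden layer, then the output neuron."""
    hidden = [neuron(inputs, w, b) for w, b in zip(hidden_weights, hidden_biases)]
    return neuron(hidden, output_weights, output_bias)

output = forward([1.0, 2.0])
parameter_count = (
    sum(len(row) for row in hidden_weights)
    + len(hidden_biases)
    + len(output_weights)
    + 1  # the output bias
)
print(f"output={output:.3f}, parameters={parameter_count}")
```

This network has 9 parameters. Staring at those nine numbers tells you very little about what the network does, which is the point made above, only at a vastly smaller scale.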

Newer neural networks are HUGE. DeepSeek-V3 has 671 billion parameters. In full precision, where each parameter is stored in 32 bits (4 bytes), we would need 2,684GB of memory simply to load this model. For context, if you have bought an Apple MacBook Pro M4 Max with 48GB of memory, you would be able to load about 2% of the model! This is why supercomputers and GPUs with large, fast memory are so important. More on this in a separate post.
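The memory arithmetic is easy to reproduce for any model size. The sketch below uses a hypothetical 70-billion-parameter model purely as an example, and it only counts the parameters themselves, assuming 1GB is 10^9 bytes. Running a model needs extra memory on top of this.

```python
def memory_needed_gb(parameter_count, bytes_per_parameter=4):
    """Memory needed to hold the parameters alone, in GB (1 GB = 10**9 bytes)."""
    return parameter_count * bytes_per_parameter / 1e9

# Hypothetical model size, chosen only for illustration.
parameters = 70e9

full_precision_gb = memory_needed_gb(parameters)     # 32 bits = 4 bytes each
half_precision_gb = memory_needed_gb(parameters, 2)  # 16 bits = 2 bytes each

laptop_memory_gb = 48
fraction_loadable = min(1.0, laptop_memory_gb / full_precision_gb)

print(f"full precision: {full_precision_gb:.0f}GB")
print(f"half precision: {half_precision_gb:.0f}GB")
print(f"share that fits in {laptop_memory_gb}GB: {fraction_loadable:.0%}")
```

Storing each parameter in fewer bytes is one of the simplest ways to shrink the memory footprint, which is why lower-precision formats are so widely used.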

Deep Learning

A very important sub-field of Machine Learning is Deep Learning. Arguably Deep Learning is the reason why AI has reached the level of intelligence that it has. Deep Learning uses neural networks and applies a learning algorithm on very large datasets to iteratively improve the AI model parameters.

Deep Learning models typically require large amounts of training data, lots of compute and GPUs, and lots of energy to run.

To give you an idea of the scale of training these very large, very deep models: GPT-3, released in 2020 (a 175Bn parameter Deep Learning language model), is estimated to have been trained on data drawn from around 500 billion tokens over a cluster of 1,024 GPUs. There are comments online stating that GPT-3 would have taken 355 years to train on a single GPU.
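As a back-of-the-envelope check on why clusters matter, we can combine the two figures quoted above. This sketch assumes the work splits perfectly across GPUs and that both figures refer to the same kind of GPU. Neither assumption holds in practice, so treat the result as an optimistic lower bound rather than a real training time.

```python
DAYS_PER_YEAR = 365

def ideal_training_days(single_gpu_years, gpu_count):
    """Wall-clock days if the work split perfectly across all GPUs."""
    return single_gpu_years * DAYS_PER_YEAR / gpu_count

# Figures quoted in the text above; perfect scaling is an assumption.
days = ideal_training_days(single_gpu_years=355, gpu_count=1024)
print(f"roughly {days:.0f} days")
```

Even under these generous assumptions, the training run is measured in months.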

RL (Reinforcement Learning)

RL is also a type of Machine Learning. Rather than using a dataset to learn correct decision-making, it learns directly by interacting with an environment. The RL model makes an observation and then decides on an action. The action is executed in the training environment, and the results in the environment are observed by the model. Whether an action is good or bad is determined by a reward function. Good actions are encouraged by the model receiving a positive reward, whereas bad actions are penalised with a negative one. It is the action and reward pairs which drive the training of an RL model.
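Here is a stripped-down sketch of that act, get reward, update loop. It is the simplest possible case: the environment is hypothetical, there are only two actions, and the observation never changes, so the model only has to learn which action is worth more. The names `reward_function` and `train` are made up for this example.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

ACTIONS = [0, 1]

def reward_function(action):
    """Hypothetical environment: action 1 is good (+1), action 0 is bad (-1)."""
    return 1.0 if action == 1 else -1.0

def train(steps=200, learning_rate=0.1, explore_rate=0.1):
    value = [0.0, 0.0]  # the model's current estimate of each action's reward
    for _ in range(steps):
        if random.random() < explore_rate:
            action = random.choice(ACTIONS)                # occasionally explore
        else:
            action = max(ACTIONS, key=lambda a: value[a])  # otherwise act greedily
        reward = reward_function(action)                   # the environment responds
        # Nudge the estimate for this action towards the reward received.
        value[action] += learning_rate * (reward - value[action])
    return value

value = train()
best_action = max(ACTIONS, key=lambda a: value[a])
print(f"estimated values={value}, best action={best_action}")
```

Nobody tells the model that action 1 is the right answer. It finds out by trying actions and seeing which ones are rewarded.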

RL has been around for a while. It gained a lot of attention with AlphaGo, an RL agent that beat the world champion in the complex game of Go. You might be hearing the term RL again these days in conjunction with LLMs. RL is now being applied to the final build steps of LLMs to improve their alignment with human judgment, and it is particularly used to encourage reasoning. I would check out the DeepSeek-R1 paper for more info.

GenAI (Generative AI)

This is the buzzword at the moment, and you have surely come across it! GenAI refers to any Machine Learning approach which is tasked with generating content. Examples include generating text from an input prompt or generating images based on a description. This differs from ‘traditional’ Machine Learning tasks such as classification, which sort inputs into one of a fixed set of outputs. Large Language Models are a type of Generative AI model, as are many of the image generation tools (such as DALL-E).

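Diffusion models are another type of Generative Model which you might come across. They are still built on neural networks, but they are trained differently: noise is gradually added to training examples, and the model learns to remove it. Generating new content then starts from pure noise, which is de-noised step by step into something in a similar style to the training data. You can read more here!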

Large Language Models

Large Language Models have been central to the AI boom of the 2020s. An LLM is a deep-learning neural network which is specifically designed to work with natural language. LLMs can answer questions, create content, reason over scenarios and even write software.

ChatGPT, Claude, DeepSeek and many other modern commercial AI tools are all built on language models. I’m not sure there is an explicit definition of when a language model becomes a Large Language Model. However, the term LLM has been popularised by the biggest AI models the world has seen. In my eyes, a language model becomes large when it becomes difficult to run on a single GPU. In terms of parameters, I’d say a model larger than 40B is large.

Opinion incoming: smaller models (SLMs) need to be the way of the future

In contrast to an LLM, you may also start to hear the phrase Small Language Model (SLM). There have been criticisms of the ethos of improving capability by ‘just making the model bigger’, which is what OpenAI and many others have been doing with their LLMs. A new avenue of research is focused on ways to make similarly capable models at a much smaller size. I have been following the work of Microsoft on their Phi series since attending a talk by Sebastian Bubeck at NVIDIA GTC 2024. Microsoft are using a more conscious choice of training data and a smaller model architecture to train capable language models. Surely the future holds a more efficient, more targeted and more conscious development of task-specific models, as opposed to one very large, very general model.

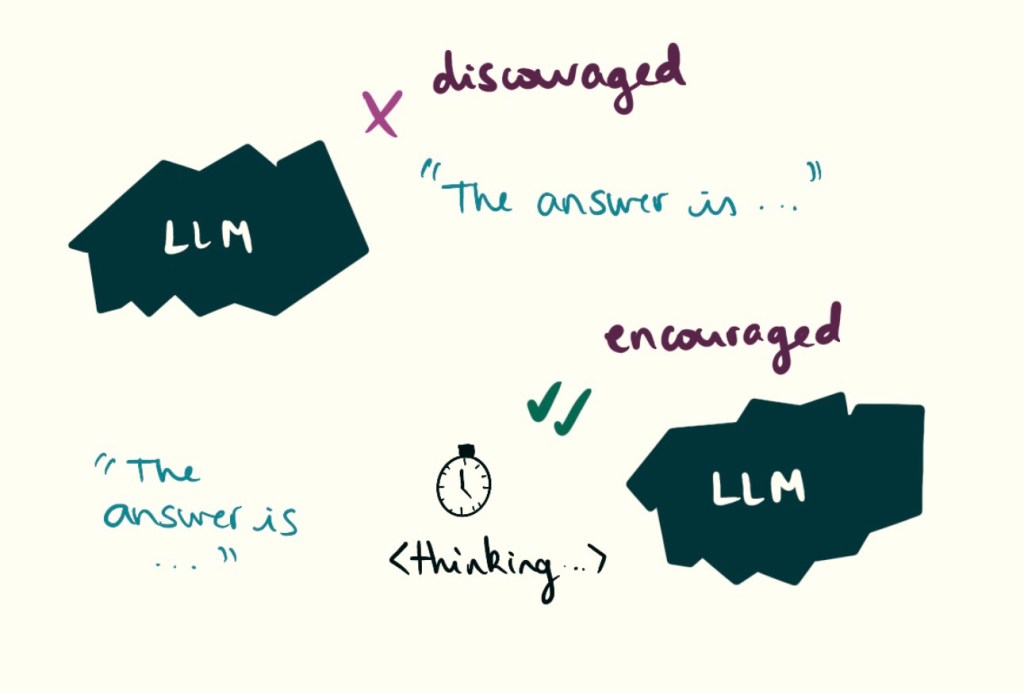

Reasoning Models

The AI community loves to create new words for existing concepts. Reasoning models are essentially Large Language Models which have been specifically adapted to encourage self-reflection and thinking. An LLM is created, and then Reinforcement Learning is used to encourage the LLM (with a positive reward) to spend more time thinking before making a final answer. That is all!

Model distillation is another popular term at the moment. It involves using a large and highly capable model to transfer its knowledge into a much smaller model. This has been particularly effective with reasoning models, where the reasoning steps from a large model are collected and used to train a smaller model.
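The data-collection half of distillation can be sketched in a few lines. In the example below, `teacher_solve` is a hypothetical stand-in for a large reasoning model (here it just adds two numbers and writes out its steps), and `build_distillation_dataset` is a made-up helper. The second half, training the smaller model on this dataset, is ordinary model training and is left out.

```python
def teacher_solve(a, b):
    """Stand-in for a large reasoning model: returns reasoning steps and an answer."""
    tens = (a // 10 + b // 10) * 10
    ones = a % 10 + b % 10
    steps = [
        f"Add the tens: {a // 10 * 10} + {b // 10 * 10} = {tens}",
        f"Add the ones: {a % 10} + {b % 10} = {ones}",
        f"Combine: {tens} + {ones} = {tens + ones}",
    ]
    return steps, tens + ones

def build_distillation_dataset(problems):
    """Collect the teacher's reasoning traces as training examples for a student."""
    dataset = []
    for a, b in problems:
        steps, answer = teacher_solve(a, b)
        dataset.append({
            "prompt": f"What is {a} + {b}?",
            "target": "\n".join(steps) + f"\nAnswer: {answer}",
        })
    return dataset

dataset = build_distillation_dataset([(12, 30), (47, 25)])
for example in dataset:
    print(example["prompt"])
    print(example["target"])
```

The key design choice is that the student is trained on the teacher's full working, not just its final answers, so it can pick up the reasoning pattern as well as the result.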

Emergence and Emergent Behaviour

The term ‘emergence’ has emerged alongside the rapid development of Large Language Models. The models are getting better at a much larger range of tasks, including tasks that they were not necessarily trained to be good at. Emergence is the idea that a highly capable model, trained on several varying tasks, can also be effective at new tasks that were never intentionally included in training.

Agents

An agent is a type of AI which not only makes decisions but also executes actions based upon those decisions. As opposed to just a decision-making tool, an agent will affect the real world, for example by navigating along a track or executing bits of code.

The term has previously been linked heavily with Reinforcement Learning, where the RL model is often also called an agent. However, an agent may be powered by other techniques. Agents can be helpful in robotics to increase the autonomy of robots. Agents can also be helpful outside of robotics, interacting with humans via natural language and controlling external tools like databases. This will be the focus of my next post, as agents are rumoured to be the AI topic of the year for 2025. I believe the rumours are related to using LLMs in agentic ways to support personal productivity. But I will do my research and explain fully in a post!
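The difference between deciding and acting can be shown with a very small observe, decide, act loop. This is only a sketch: the `Track` environment and the rule inside `decide` are hypothetical, and in a real agent the decision step could be an RL model or an LLM instead of a hand-written rule.

```python
class Track:
    """Hypothetical environment: a 1-D track with a goal position."""

    def __init__(self, start, goal):
        self.position = start
        self.goal = goal

    def observe(self):
        return self.goal - self.position  # signed distance to the goal

    def move(self, step):
        self.position += step  # the action changes the state of the world

def decide(distance_to_goal):
    """The decision-making part: which way to step (a simple rule here)."""
    if distance_to_goal > 0:
        return 1
    if distance_to_goal < 0:
        return -1
    return 0

def run_agent(track, max_steps=100):
    """Observe, decide, act, and repeat until the goal is reached."""
    steps_taken = 0
    for _ in range(max_steps):
        action = decide(track.observe())
        if action == 0:
            break
        track.move(action)  # executing the decision is what makes it an agent
        steps_taken += 1
    return steps_taken

track = Track(start=2, goal=7)
steps = run_agent(track)
print(f"reached position {track.position} in {steps} steps")
```

Without the `track.move(action)` line this would only be a decision-making tool. Executing the decision, and then observing the result, is what turns it into an agent.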

Conclusions

I hope this post has given you a quick introduction to the main terms that are used by the AI community. It is a complex and vast landscape, and this is by no means an exhaustive list. I have included the most relevant terms. As you’ll probably experience, new terms are brought out all the time – by next week there will probably be a new word for a breakthrough. Hopefully, these will give you a good foundation to understand exactly what is being discussed in the news!
