The AI Agent is predicted to dominate the AI news of 2025. Whilst previous years saw a boom in LLMs and their ever-improving capabilities, this year Agents built on those LLMs are expected to boom in turn.

In this post, we shall take a journey through AI Agents, and figure out what this term means! If it’s the topic of the year, then we should probably learn a little more. By the end of this post, you should have a good idea of the different types of AI agents and have a few examples of each.

See my previous blog, Decoding AI, for a reminder of the meaning of the key terms.

AI Agents Operate In The World

What if AI could do stuff for you, in the world, without your oversight? Well, this is why AI Agents exist! They are thought to be the topic of the year as the world seeks to boost productivity and the impact of AI on society.

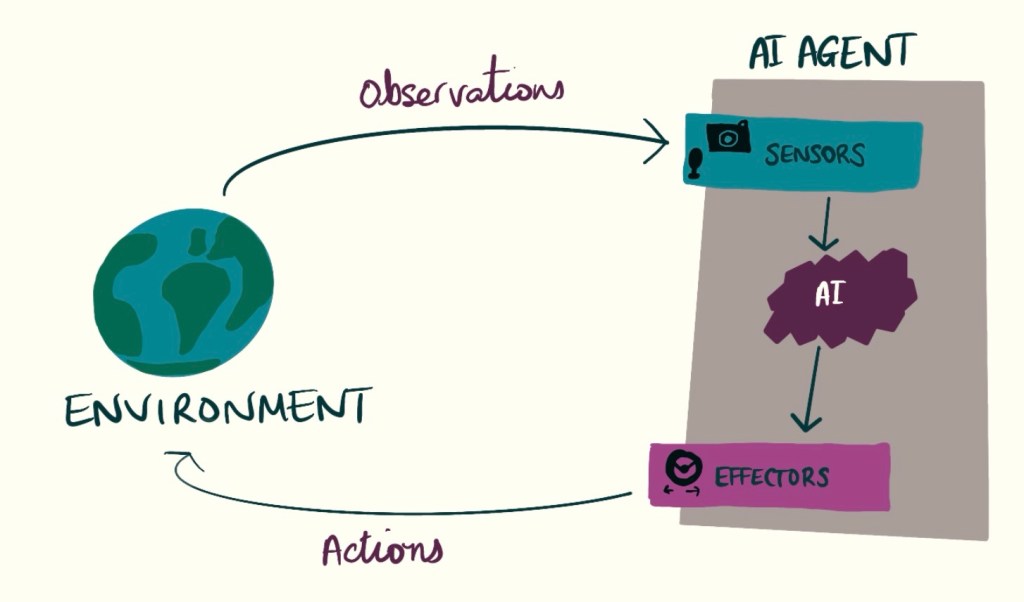

An AI Agent is a type of Artificial Intelligence that senses its environment using sensors and responds to that environment with an action using an effector. AI Agents are designed to sense, decide and act continuously, for a given task, without human supervision.

The idea is agents could replace humans for certain tasks – to aid productivity, reduce human workload or even remove humans from dangerous situations.

But what do sensing and acting actually mean?

Sensing the environment is the live collection of data from the environment at that exact moment (not a dataset). For example, a microphone collecting sound, a user chat for text, or a camera for imagery.

Responding, or acting, in an environment, means that the agent does something based on a decision it has made. It could be turning right at a junction, or sending emails to the spam folder.

These things combine with a decision-making AI algorithm to form an Agent.
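
To make the sense, decide, act loop concrete, here is a minimal sketch in Python. It borrows the spam folder example from above; the function names and the "free money" rule are made up purely for illustration, and a real agent would read live data rather than a fixed list.

```python
from typing import Callable, Dict, Iterable, List


def run_agent(
    observations: Iterable[str],
    decide: Callable[[str], str],
    act: Callable[[str, str], None],
) -> None:
    """Run one sense -> decide -> act pass per observation."""
    for observation in observations:   # sense: one reading at a time
        action = decide(observation)   # decide: pick an action
        act(observation, action)       # act: the effector carries it out


def decide_folder(email_subject: str) -> str:
    # Hypothetical decision rule, for illustration only.
    return "spam" if "free money" in email_subject.lower() else "inbox"


# The "environment" the effector changes: two mail folders.
folders: Dict[str, List[str]] = {"inbox": [], "spam": []}


def move_email(email_subject: str, folder: str) -> None:
    folders[folder].append(email_subject)


run_agent(["Team meeting at 3pm", "FREE MONEY inside!"], decide_folder, move_email)
print(folders)
```

The sensor, the decision-maker and the effector are passed in separately, so any of the three could be swapped out without touching the loop itself.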

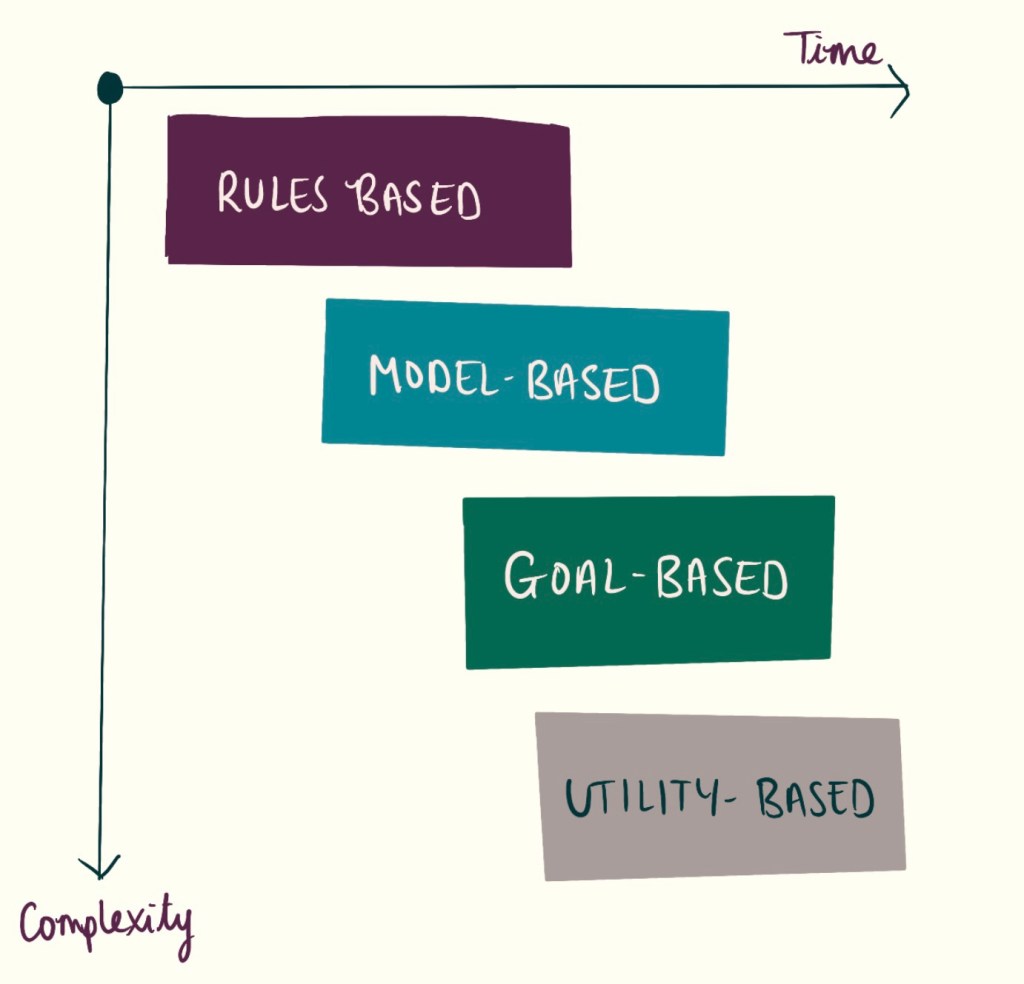

Agents Are Categorised Into Four Types

These definitions are a little abstract, and of course, nothing is quite that straightforward. The actions an agent can take, the intelligence of its decision-making process and the data it senses all vary between agents!

Let’s go through some broad categories, starting with the simplest and ending with the most complex (roughly where the world is today).

If you are interested in learning more, a lot of this information originates from the World Economic Forum report on Agents.

Simple Rules-Based (Reflex) Agent

The simplest, and oldest type of AI Agent. This agent will accept simple data inputs and will have a limited number of actions. It has a hard-coded set of rules the agent should follow to determine the right action.

Moreover, the decision is based solely on the current state: data comes in, and the agent uses only that data to decide which of its actions to execute.

“If you see a junction, then you should stop”

“If there is less than £100 in my bank account, then you should notify me”

These agents are designed entirely by humans; there is no learning or intelligence. A human designer comes up with the action to take in as many situations as they can imagine the agent encountering. Often these are for simple tasks, with a limited number of scenarios and potential actions.

Rules-based agents are explainable and reliable

Rules-based agents are awesome because they are inherently explainable. We can find out exactly why an agent took a certain action, because it is written in its rule book. The rule book we created! They are a great choice for situations we can predict, with simple data inputs and easy-to-define outcomes.

Rules-based agents are not flexible

They are less awesome since they can only act on scenarios that we considered at design time. The agent will not be able to act in new scenarios. This makes them much less suitable for complex applications or complex sensor inputs.

Examples: Email Spam-Filter, Thermostat

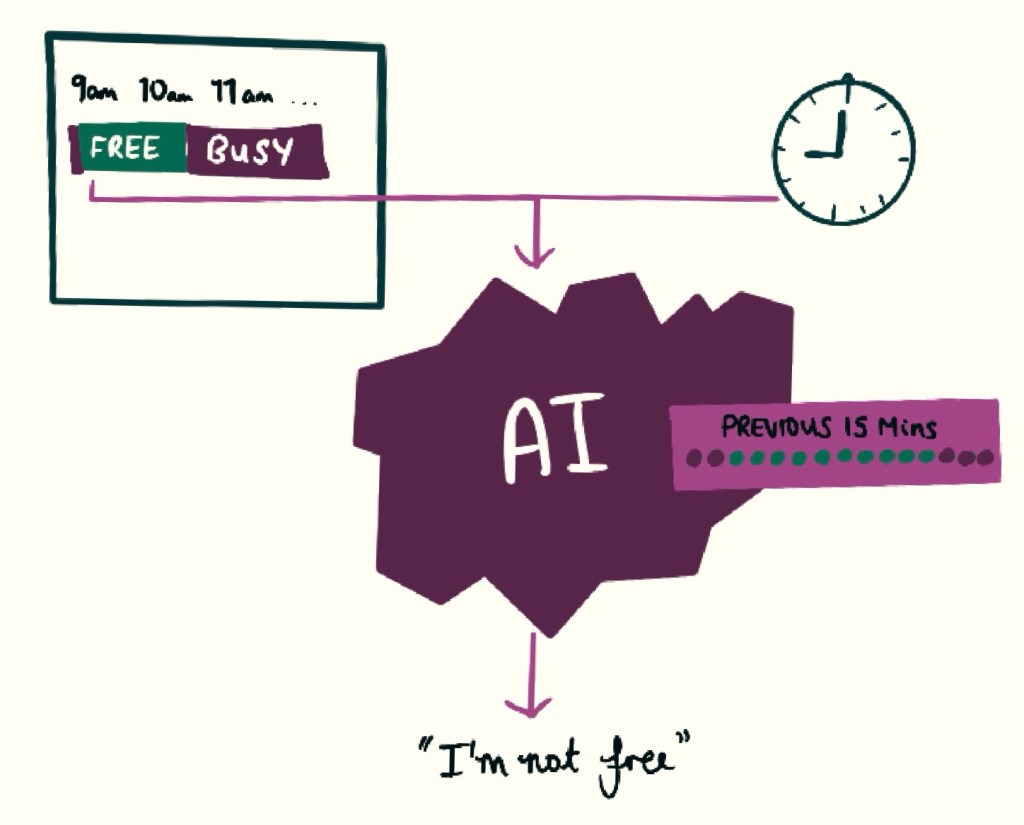

Let’s create a personal assistant agent who helps you organise your calendar. This will be a simple reflex agent which can help book appointments. Let’s design this agent’s sensor to read the current date, time and the corresponding status of my calendar – Free or Busy. The best I can make my agent do is to allow someone to book an appointment at the current time if I am Free, and decline if I am Busy.
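
Here is roughly what that calendar agent could look like in Python. This is only a sketch: the `Observation` class, the `RULES` table and the action names "book" and "decline" are my own illustrative choices, and the observation is passed in by hand rather than read from a real calendar.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Observation:
    """What the sensor reads: the current time and my calendar status."""
    now: datetime
    status: str  # "Free" or "Busy"


# The entire hand-written rule book: one action per possible status.
RULES = {"Free": "book", "Busy": "decline"}


def reflex_booking_agent(observation: Observation) -> str:
    """Simple reflex agent: decide using only the current observation."""
    return RULES[observation.status]


nine_am = datetime(2025, 1, 6, 9, 0)
print(reflex_booking_agent(Observation(nine_am, "Free")))  # book
print(reflex_booking_agent(Observation(nine_am, "Busy")))  # decline
```

Notice that the agent is explainable in exactly the sense described above (the reason for any action is a single entry in `RULES`), and also inflexible: any status that is not in the rule book raises a `KeyError`.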

Model Based Reflex Agent

Building on the simple agents, the next step is something that can keep track of previous states.

Model-based agents keep track of the world

Model-based reflex agents have more intelligence. They have an internal world model, which lets them store a limited amount of information about past experiences. Every time the agent makes a new observation, its internal state is updated, and it then chooses an action using both the internal state and the current observation.

These agents are also governed by rules but, unlike the simple reflex agents above, they take previous states into consideration.

Examples: Smart Thermostats and Smart Robot vacuum cleaners.

Let’s build on top of my agent! This time my agent can know if I have had an appointment in the last 15 minutes, since I usually like to have a break between appointments. On top of knowing the current date, time and calendar status, it will store my calendar status for the last 15 minutes. My new rules are: if I am currently free and I have been free for the last 15 minutes, then book the appointment. Otherwise, do not.
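
A sketch of this upgraded agent is below. To keep it short, the internal state is just the time I was last seen Busy, which is enough to answer "have I been free for the last 15 minutes?". The class and method names are hypothetical, and I am assuming that any time before the agent's first observation counts as free.

```python
from datetime import datetime, timedelta
from typing import Optional


class ModelBasedBookingAgent:
    """Reflex agent with a small internal state: when was I last Busy?"""

    def __init__(self, break_length: timedelta = timedelta(minutes=15)) -> None:
        self.break_length = break_length
        # Assumption: before the first observation, I count as free.
        self.last_busy: Optional[datetime] = None

    def step(self, now: datetime, status: str) -> str:
        # 1. Update the internal state with the new observation.
        if status == "Busy":
            self.last_busy = now
            return "decline"
        # 2. Apply the rule using both the state and the current observation.
        rested = self.last_busy is None or now - self.last_busy >= self.break_length
        return "book" if rested else "decline"


agent = ModelBasedBookingAgent()
start = datetime(2025, 1, 6, 9, 0)
print(agent.step(start, "Busy"))                          # decline
print(agent.step(start + timedelta(minutes=5), "Free"))   # decline
print(agent.step(start + timedelta(minutes=20), "Free"))  # book
```

The second call is the interesting one: the current observation is Free, so the simple reflex agent would have booked, but the remembered state says my break is not over yet.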

Goal Based Agent

We have considered the present, we have considered the past, so what about the future? Goal-based agents do just that. What decision can I make, based on what I have seen, what I currently see and what my long-term goal is? This is where Reinforcement Learning comes in (we will describe this in another post).

Goal based agents learn to act in a way that achieves a defined goal

You define a goal, and some success criteria and create an environment for an agent to learn in (real or simulated). The agent will learn a series of actions to take in a wide range of scenarios to achieve its overarching goal.

Goal based agents can respond in unpredictable environments

Importantly, these agents do not need a rule book written for them, nor do we need to understand every possible scenario they might encounter. This means they can act in unseen scenarios.

Examples: AlphaGo, Tesla Autopilot

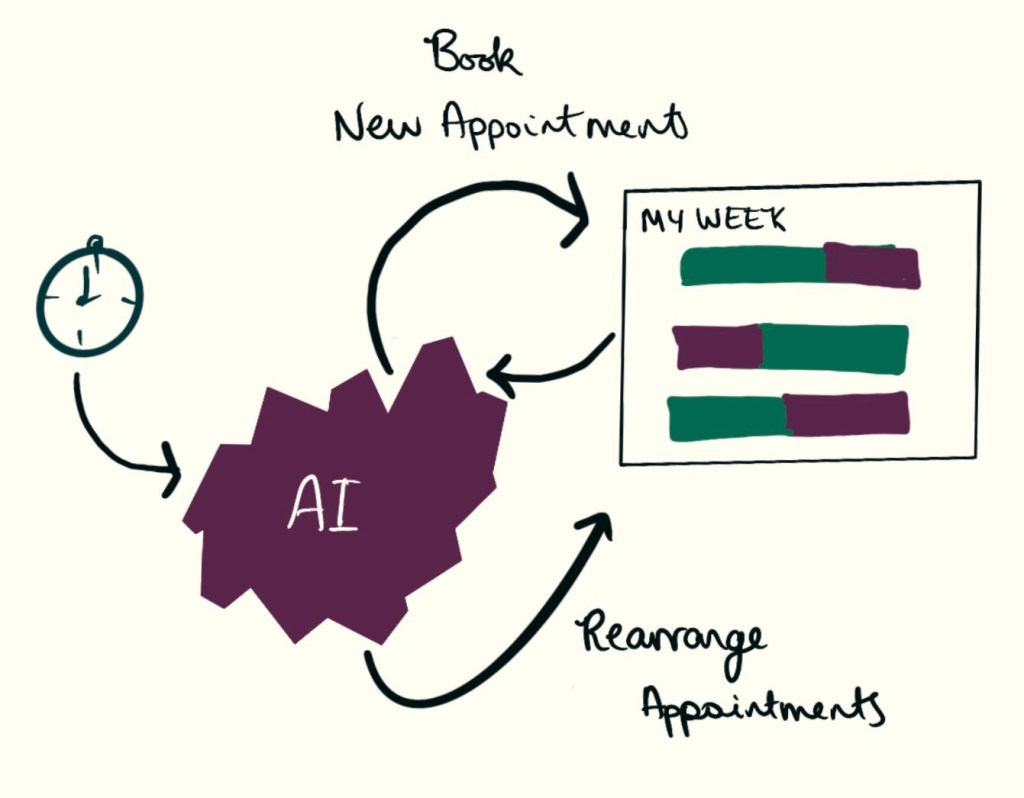

Right, our agent is getting better, but ideally it wouldn’t just say yes or no to customers; it could book them in for a future time. I would also like it to understand more details about my previous appointments, to know what appointments I have in the future, and to book new ones in so I don’t get overworked. Also, I work better in the mornings, so I would prefer these slots. It’s all getting complicated, and I don’t have the time to sit and write down every action to take in every scenario. I think this time I will create an RL agent…
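
A full RL agent is too much for this post, so the sketch below swaps in something much simpler: a hand-written search that checks candidate slots against a goal test. It is not Reinforcement Learning and nothing is learned, but it shows the key shift from "which rule matches?" to "which action gets me to my goal?". The goal used here (no more than two appointments per day), the slot format and the function names are all assumptions for illustration.

```python
from typing import Dict, List, Optional, Tuple

Slot = Tuple[str, int]  # (day, hour of day), e.g. ("Mon", 9)


def goal_reached(booked: List[Slot], max_per_day: int) -> bool:
    """Goal test: no day holds more than max_per_day appointments."""
    per_day: Dict[str, int] = {}
    for day, _hour in booked:
        per_day[day] = per_day.get(day, 0) + 1
    return all(count <= max_per_day for count in per_day.values())


def choose_slot(
    booked: List[Slot], candidates: List[Slot], max_per_day: int = 2
) -> Optional[Slot]:
    """Return the first candidate whose resulting calendar meets the goal."""
    for slot in candidates:
        if slot in booked:
            continue  # already taken
        if goal_reached(booked + [slot], max_per_day):
            return slot
    return None  # no action reaches the goal


booked = [("Mon", 9), ("Mon", 11)]
candidates = [("Mon", 9), ("Mon", 14), ("Tue", 10)]
print(choose_slot(booked, candidates))  # ('Tue', 10)
```

The agent looks ahead at the calendar each action would produce, rather than reacting to the current status alone. A learned agent would replace the hand-written loop with a policy trained against the same kind of success criteria.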

Utility Based Agents

In the current day, surely we can’t do better than goal-based agents? Well, yes we can, because there may be several different ways of achieving the goal, and ideally we would aim for the best one. Utility-based agents do just that. They are built upon goal-based agents, i.e. they take actions to maximise the chance of reaching a goal. However, they also evaluate how good the outcomes are and choose the best one.

Utility based agents learn to act in a way that achieves a goal whilst also maximising a utility score

You can specify not only the goals of the agent but also some qualities that make an outcome more or less preferred.

Goal based and utility based agents are not explainable

A word of caution, since it all sounds very positive. Both goal-based and utility-based agents use complicated, high-dimensional sensor data and deal with many different scenarios by learning appropriate responses, so understanding why an action was taken is very challenging. If a disastrous action was taken, how do we find out the reason? And how could we prevent this from happening again? We can’t just look into the model to find out why: it is a black box. This is why there is lots of caution in the community about how these agents are used, and why AI safety is a big topic right now.

Some days I’m having a bad day, and I would actually like to book my appointments more sporadically, prioritising my time. But sometimes I’m having a great day and I’d like to book appointments to maximise my revenue. I’d like to be able to choose a mode which better suits my mood.
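
Here is a sketch of how those two moods could be expressed as a utility score. The scoring weights, the mode names "rest" and "revenue" and the slot format are invented for illustration, and the utility function is hand-written rather than learned. Every candidate slot gets a score, and the agent simply picks the highest.

```python
from typing import List, Tuple

Slot = Tuple[int, int]  # (days from today, hour of day)


def utility(slot: Slot, booked: List[Slot], mode: str) -> float:
    """Score a slot: higher is better. All weights are illustrative guesses."""
    day, hour = slot
    score = 1.0 if hour < 12 else 0.0  # I work better in the mornings
    if mode == "rest":
        # Bad day: avoid days that already have appointments.
        same_day = sum(1 for booked_day, _hour in booked if booked_day == day)
        score -= 2.0 * same_day
    elif mode == "revenue":
        # Great day: see the customer as soon as possible.
        score -= 2.0 * day
    else:
        raise ValueError(f"unknown mode: {mode}")
    return score


def choose_slot(candidates: List[Slot], booked: List[Slot], mode: str) -> Slot:
    """Pick the candidate with the highest utility for the chosen mode."""
    return max(candidates, key=lambda slot: utility(slot, booked, mode))


booked = [(0, 9), (0, 11)]
candidates = [(0, 14), (1, 10), (2, 15)]
print(choose_slot(candidates, booked, "rest"))     # (1, 10)
print(choose_slot(candidates, booked, "revenue"))  # (0, 14)
```

All three candidates are acceptable bookings, which is as far as a goal-based agent would go. The utility score is what lets the agent prefer tomorrow morning on a bad day and this afternoon on a great one.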

Conclusions

So here we are, we’ve made it through the four main types of AI agents. As I hope you have seen, the term agent refers to such a wide range of concepts – some very simple, some very complex. At the end of the day, an agent reads data from the real world, then decides and takes an action based on what it has seen.

I will follow up soon with more detail on Reinforcement Learning and LLM Agents. These topics pretty much dominate all use of the word Agent at the moment – so they deserve a dedicated post.
