What is it and how do I use it?

Alexa, the at-home voice-enabled personal assistant, was built by Amazon and is used by millions all over the world. It is perhaps one of the most well-known voice AI assistants (competing, of course, with Siri; I always get the names mixed up). I use Alexa all the time. Hands-free voice control in your home is pretty useful. But have you ever wondered how it works? I have, and here I describe what I’ve found.

Alexa in my home

Firstly, how do I use Alexa? I have an Amazon Echo Dot in my kitchen, which we received as a gift a few Christmases ago. I pretty much only use Alexa for telling me the time, setting timers whilst I am cooking and, most importantly, playing music – even if Alexa insists on using Amazon Music over my Spotify… It has been quite useful, and I have been interested in knowing how it works.

So How Does It Work?

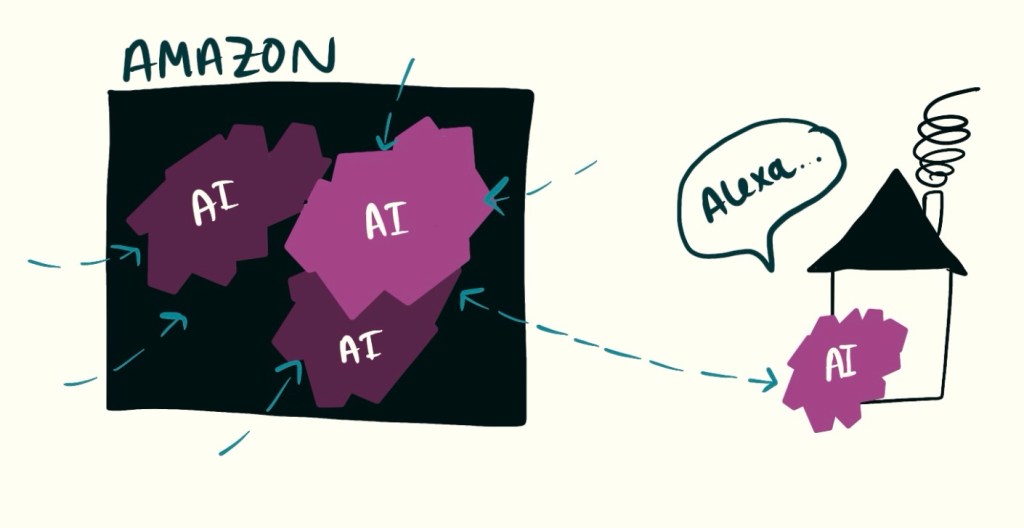

Alexa is built around an ASR system. ASR stands for Automatic Speech Recognition, a type of Machine Learning which processes speech. The whole of Alexa is more than just the Echo Dot that lives in your kitchen. It involves that small box, but also a whole bunch of powerful computers that Amazon run and that are connected to your Echo Dot over the internet. This is why you might find your box can’t do much if you change your Wi-Fi and it loses its connection.

ASR models come in many different forms, but most are based on a Neural Network, trained using Deep Learning to convert audio (often represented as spectrograms) into phonemes, the basic units of sound that make up words.
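To make the word "spectrogram" a bit more concrete, here is a minimal sketch in plain Python. It chops a signal into short overlapping frames and measures how much of each frequency is present in each frame. The sample rate, frame size and test tone are values I picked for illustration, and the slow textbook Fourier transform here is for readability only; I am not claiming this is how Alexa prepares its audio.

```python
import cmath
import math


def frame_signal(samples, frame_size, hop):
    """Split the signal into overlapping frames of frame_size samples."""
    return [samples[i:i + frame_size]
            for i in range(0, len(samples) - frame_size + 1, hop)]


def magnitude_spectrum(frame):
    """Naive discrete Fourier transform: strength of each frequency bin."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n)))
            for k in range(n // 2)]


def spectrogram(samples, frame_size=64, hop=32):
    """One row per time frame, one column per frequency bin."""
    return [magnitude_spectrum(f) for f in frame_signal(samples, frame_size, hop)]


# Illustrative input (assumed values): a pure 200 Hz tone sampled at 1600 Hz.
SAMPLE_RATE = 1600
tone = [math.sin(2 * math.pi * 200 * t / SAMPLE_RATE) for t in range(256)]

spec = spectrogram(tone)
loudest_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
loudest_hz = loudest_bin * SAMPLE_RATE / 64  # each bin is 25 Hz wide here
```

A neural network never sees "sound" as such. It sees a grid of numbers like `spec`, where real speech would light up many bins at once and change from frame to frame.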

Alexa are you listening to me?

The Alexa which sits in your home uses a ‘wake-word’ detection algorithm to listen for the word ‘Alexa’. This means it listens continuously for sounds like the word “Alexa”, which is why some similar-sounding words like “Lexus” will trigger it. Alexa has a Machine-Learning model running specifically to listen out for this wake-word. This part of the system needs to be running all the time, available to respond at any point you say “Alexa”.

What does this mean? Firstly, this bit of the AI needs to be fast – you don’t want a delay after you say “Alexa” before it starts listening. Secondly, it is on all the time, so it is processing data ALL THE TIME. This part of the system needs to be low-powered; otherwise the energy bill will be huge and no one will use it!

Wake word detection is a special type of ASR, built specifically to detect a single word at low power and with a fast response. This part of Alexa is what runs on the small box you have in your home.
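Here is a toy sketch of the decision step in wake-word detection. It assumes a small model has already given each short slice of audio a score between 0 and 1 for "how much does this sound like Alexa?". The scores, window size and threshold below are made up for illustration, and the class is my own invention rather than anything from Amazon.

```python
from collections import deque


class WakeWordDetector:
    """Fires when the average of the last few scores crosses a threshold."""

    def __init__(self, window=3, threshold=0.8):
        self.scores = deque(maxlen=window)  # only keeps the newest scores
        self.threshold = threshold

    def update(self, score):
        """Feed one score; return True if the device should wake up."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        average = sum(self.scores) / len(self.scores)
        return window_full and average >= self.threshold


def first_trigger(frame_scores, **settings):
    """Index of the first frame that wakes the device, or None."""
    detector = WakeWordDetector(**settings)
    for index, score in enumerate(frame_scores):
        if detector.update(score):
            return index
    return None


# Hypothetical scores for three bits of audio.
background = [0.1, 0.2, 0.1, 0.3, 0.1]
alexa = [0.1, 0.2, 0.9, 0.95, 0.9, 0.3]
lexus = [0.1, 0.3, 0.8, 0.85, 0.8, 0.2]

print(first_trigger(background))             # never wakes
print(first_trigger(alexa))                  # wakes
print(first_trigger(lexus))                  # also wakes: a false alarm
print(first_trigger(lexus, threshold=0.85))  # a stricter setting ignores it
```

The work per frame is tiny (one addition and one comparison on top of the model's score), which fits the "fast and low-powered" requirement. The threshold is the trade-off: set it too low and “Lexus” wakes the device, set it too high and you have to repeat yourself.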

Top tip: you can tell when Alexa is listening (i.e. you have said the wake word) because the light on the box comes on or it makes a noise.

Alexa do what I tell you!

Once Alexa is woken up, the next part of the AI is triggered. Running at the heart of Alexa is a voice control AI system. This is the clever bit. Firstly, your speech is transformed into text. This means Alexa can capture what you are saying in a much nicer format (words are way easier for a computer to work with than audio files).

Unlike the wake word detection, this ASR has a harder role – it needs to accurately transcribe your commands – BUT it won’t be running all the time, only when you have asked. This model must be more capable, so it can run at a higher power. It also must be fast and accurate. From what I have read, this part of Alexa is run in the Amazon cloud. The small Alexa in your home will speak to a bigger, more intelligent Alexa running in Amazon data centres (the Alexa Voice Service).

Running in the cloud means that Amazon have their own very capable computer infrastructure running the AI software. Your at-home device connects to the cloud hardware over the internet, sort of like how you access YouTube videos: they aren’t downloaded to your computer! You, at home, do not need to worry about managing these computers or paying their bills. Amazon handles all of this!

What do you mean you don’t understand?

The next component is an NLU (Natural Language Understanding) block, which turns what you say into a command that Alexa can understand. Think of how many different ways there are of saying “Alexa, can you play Taylor Swift?”. This block is essentially a translator for all the things we might say, into commands that Alexa can understand. It is powered by a Language Model. This can also help correct any mistakes in the transcribed speech or unclear things we have said.
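To show what "many ways of saying it, one command" looks like, here is a deliberately simple sketch. It uses hand-written patterns instead of a language model, so it is a stand-in for the idea and not how Alexa's NLU actually works. The intent names (`PlayMusic`, `SetTimer`) and the output format are made up for this example.

```python
import re

# Hand-written patterns standing in for a learned model (illustration only).
PATTERNS = [
    ("PlayMusic", re.compile(
        r"(?:can you |please )?(?:play|put on)(?: some)? (?P<artist>.+?)(?: please)?$")),
    ("SetTimer", re.compile(
        r"(?:set|start) a timer for (?P<minutes>\d+) minutes?$")),
]


def parse(utterance):
    """Turn transcribed speech into a command: an intent plus its details."""
    text = utterance.lower().strip(" ?!.")
    text = re.sub(r"^alexa,?\s*", "", text)  # drop the wake word
    for intent, pattern in PATTERNS:
        match = pattern.search(text)
        if match:
            return {"intent": intent, "slots": match.groupdict()}
    return {"intent": "Unknown", "slots": {}}


print(parse("Alexa, can you play Taylor Swift?"))
print(parse("Alexa put on some Taylor Swift please"))
print(parse("Alexa, set a timer for 10 minutes"))
```

The first two sentences are worded differently but come out as the same command. Hand-written patterns break quickly (nobody can list every phrasing), which is exactly why a trained language model is the better tool for this job.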

Alexa thank you for doing that

Now that Alexa has the right commands in a language it knows, it can act on those commands. This is the selling point of Alexa – it does stuff for you. You ask it to play a song, and Alexa will then play a song. You ask it to turn up the heating, and Alexa will tell your thermostat to turn up the heating.

This combination of ASR and action is what makes Alexa an AI Agent, rather than just an AI. We can control the environment using our voice and an AI system acts on our behalf. If you want to hear more about AI Agents, have a read of my previous post here.

Amazon uses APIs to allow Alexa to integrate with third-party apps like Spotify, Hive, JustEat etc. APIs are bits of software that enable programs, made by different people, to talk to each other. The Alexa commands from the NLU block get translated into API calls – instructing other programs, such as Spotify.
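Here is a rough sketch of that translation step. It takes a command (an intent name plus some details, in a format I have invented for this example) and builds a description of the web request another program would receive. The `example.com` addresses and the request bodies are placeholders, not the real Spotify or Amazon APIs, and nothing is actually sent over the internet.

```python
import json


def play_music(slots):
    """Build a request for a hypothetical music service."""
    return {
        "method": "POST",
        "url": "https://music.example.com/v1/play",  # placeholder address
        "body": json.dumps({"query": slots["artist"]}),
    }


def set_timer(slots):
    """Build a request for a hypothetical timer service."""
    return {
        "method": "POST",
        "url": "https://timers.example.com/v1/timers",  # placeholder address
        "body": json.dumps({"seconds": int(slots["minutes"]) * 60}),
    }


# Each intent is routed to the function that knows how to talk to that service.
HANDLERS = {"PlayMusic": play_music, "SetTimer": set_timer}


def to_api_call(command):
    """Translate a command into an API request, or None if unsupported."""
    handler = HANDLERS.get(command["intent"])
    if handler is None:
        return None
    return handler(command["slots"])


call = to_api_call({"intent": "PlayMusic", "slots": {"artist": "taylor swift"}})
print(call["method"], call["url"], call["body"])
```

Adding support for a new app in this sketch means writing one more handler and adding it to `HANDLERS`, which gives a feel for why APIs make third-party integrations manageable.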

Alexa speaks back

Then, in the final step, Alexa speaks back to you – it can turn its replies into speech, “Now Playing Taylor Swift on Spotify”. This is Text-to-Speech. Text-to-speech is improving quite a lot: slowly we are leaving behind the pretty robotic-sounding voices of the original voice assistants and moving to very realistic human-sounding voices. It’s quite impressive!

Alexa 2.0 Updates

What great timing to be preparing a post all about Alexa. During the research, I found out that Amazon is going to be revamping Alexa and announcing it at the end of February! So here’s some extra information about how Alexa might be evolving (that is if you are a Prime member or want to pay ££ for the privilege).

What’s new?

Well, like all other things in life these days, the new Alexa is powered by an LLM. This should mean it has a greater ability to understand more complex requests, and perhaps feel more natural or human in its responses. Essentially, the NLU and the action-taking part I described above will get better.

It also has ‘agentic behaviours’ (I’m not sure how I feel about this term) which supposedly allow it to search the internet more intelligently. So perhaps it might become helpful at answering things you would normally just type into Google, instead of turning straight to Siri, ChatGPT or Google, which already have this capability? You can read more here – Amazon Alexa Release.

I’ve personally never really found these voice assistant tools that natural to use, but I have been a user of Alexa – probably because of its convenience. Amazon has a slight advantage over the others, given how popular Alexa already was before the LLM boom. So I can see myself turning to Alexa before Siri for general knowledge questions – especially as I very rarely have my phone on me when I’m in my house.

For those who are interested in the LLM powering the new Alexa, it sounds like they have a bunch to choose from. Amazon has partnered with Anthropic, so I imagine Claude will be included somewhere. For comparison, Apple has teamed up with OpenAI – with ChatGPT powering some of the Apple Intelligence features.

There we have it

I hope this has uncovered a little bit about what Alexa is and how it works. It’s all just Machine Learning and big vectors of lots of numbers – some numbers running in your home, other numbers running on the much more powerful Amazon computers.

I shall be going away and testing out the new Alexa upgrade. I suggest you all do the same: if AI is entering our lives, we might as well try and find out how it will work for us.
