GPUs (graphics processing units) are one of the most important hardware innovations for AI. Without the GPU we would never have reached the level of AI capability that we have today. It wouldn’t have mattered how clever the algorithms are: the hardware is equally important. But what is a GPU?

In this post I will walk you through the main elements of a computer, what they are for and then we shall dive into the GPU. I will help you understand why a GPU is necessary for AI and shine a light on why they dominate the computing stock market.

If you want to skip over all of this, just remember the GPU is vital for fast, parallel computation on large volumes of data (all the things we need for AI).

If you know nothing about computers, I’ll quickly introduce some jargon I use:

Data Processing: I will say that a computer processes data, and what this means is the computer does something, like writing to a Word document or editing an image. Basically everything you do on the computer requires some sort of data processing. It’s a painfully broad term, and the complexity of the data processing changes depending on the task at hand!

Computer Operation: I might say that a computer performs an operation, and what I mean is the specific function the computer does to some data. This could be adding two numbers together.

Matrix (Matrices): Think back to high school maths; you have probably come across this before. A matrix is a core concept in computer science. It is just a large table of numbers. It has rows and columns (and if you really want to stretch your imagination, a matrix can have a third, or fourth, or Nth dimension). Matrices are used to hold data in a structured way, so you don’t lose information such as position or ‘neighbourhood’. I think the best way of picturing a matrix is by thinking about a digitised image. The numbers in the matrix are the pixels, and the row and column of each number correspond to its location in the image. Easy, right!
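To make the image analogy concrete, here is a minimal Python sketch. The tiny `image` and its brightness values are made up for illustration; a real photo would have millions of pixels.

```python
# A tiny greyscale "image" stored as a matrix (a list of rows).
# Each number is a made-up pixel brightness from 0 (black) to 255 (white).
image = [
    [0,  50, 100, 150],
    [10, 60, 110, 160],
    [20, 70, 120, 170],
]

n_rows = len(image)       # how many rows of pixels
n_cols = len(image[0])    # how many pixels in each row

# The row and column tell us where the pixel sits in the picture.
pixel = image[1][2]       # row 1, column 2 (counting from 0)

# Position is preserved, so we can look up the neighbours on either side.
left_neighbour = image[1][1]
right_neighbour = image[1][3]

print(n_rows, n_cols, pixel, left_neighbour, right_neighbour)
```

Because the position of each number is kept, "the pixel next to this one" is always just one column or one row away.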

High Performance Computing: This is basically a term used to cover those computers that are specifically built for processing huge amounts of data, in specialist ways. This may be for research projects. Just know those big tech companies doing all this innovation are not using bog-standard laptops or desktops. They will have very expensive, very large high performance computing warehouses.

Parallel Computation: This is the computer doing lots of operations all at the same time!

Your Computer, Explained

Forget about HPC or the cloud, let’s start with your average computer. Have you ever thought about what is inside your computer? Maybe you have never cared, but there are actually several key components that make your computer work. Why not spend a few minutes learning?

Picture your bog-standard laptop. Turn it upside down and imagine you take the casing off to expose the inside of the laptop. There are a couple of things you will see (yes, they will all just look like bits of tech).

Motherboard houses all the parts

Firstly everything is attached to a motherboard. There’s not much more to say, other than this is what connects it all up.

The CPU is the brain of the computer, it is what makes it all run.

Next up is the CPU. The CPU (central processing unit) is the heart of the computer, and is the reason why it functions. When someone says computer chip, they probably mean the CPU. The CPU is very good at quickly processing data in lots of different ways. It is responsible for so much of the computer’s operation.

The famous tech company Intel is a great example of a CPU developer. You might find that your laptop uses an Intel chip, normally shown by a little sticker on the casing.

You can think of the CPU as your friend who is just annoyingly good at everything. No matter what you ask them to do, they can do it and they can do it quickly. You give this person (i.e. the CPU) some data, tell them how to process it, and they will return the answer. Without it, well, the computer wouldn’t do much!

When choosing a new computer or laptop you might come across the term CPU core. This essentially describes the capability of a chip. The higher the number of CPU cores, the better your computer will be at multitasking. A powerful laptop might have 8 or more cores, a general web browsing only laptop might have 4.
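If you are curious how many cores your own machine has, a small Python sketch like this will tell you. It uses `os.cpu_count()` from the standard library, which reports logical cores, so the number may be higher than the physical core count advertised for the chip.

```python
import os

# os.cpu_count() reports the number of logical cores the operating system sees.
# It can return None if the count cannot be determined.
cores = os.cpu_count()

print(f"This machine reports {cores} logical CPU cores")
```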

RAM holds data in quick to access memory.

There are a couple of ways of storing data, long-term and short-term storage. Let’s start with short term storage.

RAM (Random Access Memory) is the bit of the computer that is responsible for holding data that is about to be processed. Unlike other data storage, RAM can read and write data quickly – making it an ideal companion for fast data processing. The RAM holds data that the CPU needs to complete the desired task.

However, where RAM is really good at passing data quickly, it is less good at keeping data safe. RAM only holds data while the computer is powered on, so anything left there is lost when you switch off. You wouldn’t want to store your files in RAM!

You can imagine that RAM is the messenger, needing to deliver messages from one person to another (the CPU). This person is not allowed to write anything down, they have to remember it all in their head. The better the memory of the messenger, the more information that can be processed by the CPU. Hence the quicker the computer!

RAM is measured in GB, and the higher the GB the more data the computer can hold in memory at a given time, and hence the better its processing ability. If you need to process large files, then you will need more RAM.
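As a rough sense of scale, here is a back-of-the-envelope sketch of how much memory one uncompressed photo takes up. The photo size and bytes per pixel are illustrative assumptions, not measurements.

```python
# Rough memory estimate for one uncompressed photo held in RAM.
# All numbers here are illustrative assumptions.
width = 4000           # pixels across
height = 3000          # pixels down
bytes_per_pixel = 3    # one byte each for red, green and blue

total_bytes = width * height * bytes_per_pixel
total_mb = total_bytes / 1_000_000            # 1 MB = 1,000,000 bytes
photos_per_gb = 1_000_000_000 // total_bytes  # whole photos that fit in 1 GB

print(f"One photo: {total_mb:.0f} MB; about {photos_per_gb} photos fit in 1 GB")
```

The same arithmetic applies to any matrix: the number of values multiplied by the bytes per value is the memory you need to hold it.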

SSD stores data, protecting it from getting lost.

The SSD (solid state drive) is a type of long term data storage, i.e. where the data is kept when it is not in use. There are other types of long term data storage, but the SSD is one of the most common in modern laptops. Writing and reading from the SSD is slower than from RAM, but the stored data (data at rest) is much more protected against data loss than it would be if kept in RAM.

The SSD is your diary: you keep all your plans and memories in here. Most of the time you don’t need quick access to them, and you would risk losing them if you tried to hold them all in your head. Instead you write these things into your diary to recall later.

SSD capacity is also measured in GB (or TB, where 1TB is 1000GB), and the higher the number the more files and data you can store on your computer. For modern laptops, 1TB is probably a good number to aim for!

Graphics Card and the GPU supports

Finally, I will mention the graphics card. The terms graphics card and GPU do get used interchangeably, but they are actually slightly different (more on this later). Like the CPU, the GPU processes data. The GPU is just specifically designed to process lots of data at the same time, but in one way. It is less generalised than the CPU.

Now you can think of the GPU as your favourite team who is awesome at one thing (and only one thing) and they can do it super fast. Way faster than your all-rounder friend, the CPU. But they are only fast at this one task.

All of these components are important to our AI world, but the GPU is definitely the most important. We will dive more into the history of the GPU and explain how it is different to the CPU. Hopefully you will get a sense of why the price and importance of GPUs in AI are a huge topic.

The Graphical Processing Unit, Not Just for Graphics

The GPU has a history in computer graphics. The term was popularised by Nvidia, and the chips were built to enable high quality computer graphics. Their purpose has broadened since, and they are now central to high performance computing.

The GPU has a history in Computer Graphics and Gaming

They were originally invented to improve computer graphics. Think of how your computer screen renders images. Hence the name graphics card. Computer graphics require fast processing on large matrices. Computer Scientists wanted to be able to quickly render images for a better visual display, and designed a new way of processing large matrices. Here the GPU/graphics card was born.

The GPU speeds up large matrix processing by running the operations in parallel.

Matrices can be manipulated in parallel

Matrices can be split up, so that sections of the data can be computed, and then the answers can be recombined to result in the same answer. This is a hugely powerful result for computer graphics and AI computing.
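Here is a minimal Python sketch of that idea. The `brighten` function and the pixel values are hypothetical, chosen only to show that working on chunks and recombining gives the same answer as working on the whole matrix.

```python
def brighten(rows, amount):
    """Add `amount` to every pixel, capping each value at 255."""
    return [[min(p + amount, 255) for p in row] for row in rows]

# A made-up 4x3 greyscale image.
image = [
    [0,   50,  100],
    [10,  60,  110],
    [200, 230, 250],
    [20,  70,  120],
]

# Process the whole matrix in one go.
full_result = brighten(image, 40)

# Split the rows into two chunks; each chunk could go to a different worker.
top, bottom = image[:2], image[2:]
recombined = brighten(top, 40) + brighten(bottom, 40)

print(recombined == full_result)
```

This works because each pixel's new value depends only on that pixel, so no chunk needs to wait for another.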

There are lots of different types of GPU, it can be complicated.

The terminology can get confusing. You’ll probably hear the term graphics card when you are looking for a new laptop. A GPU is in a graphics card, but the graphics card contains additional components (such as dedicated memory and storage).

Integrated GPUs are what you’ll find most of the time. They are built into the same chip as the CPU and share the computer’s main RAM. Dedicated GPUs sit on their own graphics card with their own memory, and are what you will find in gaming machines and specialist computers.

CPUs vs GPUs: What’s the difference?

Still don’t understand the difference between the CPU and the GPU? Why can’t we just make a better, faster CPU?

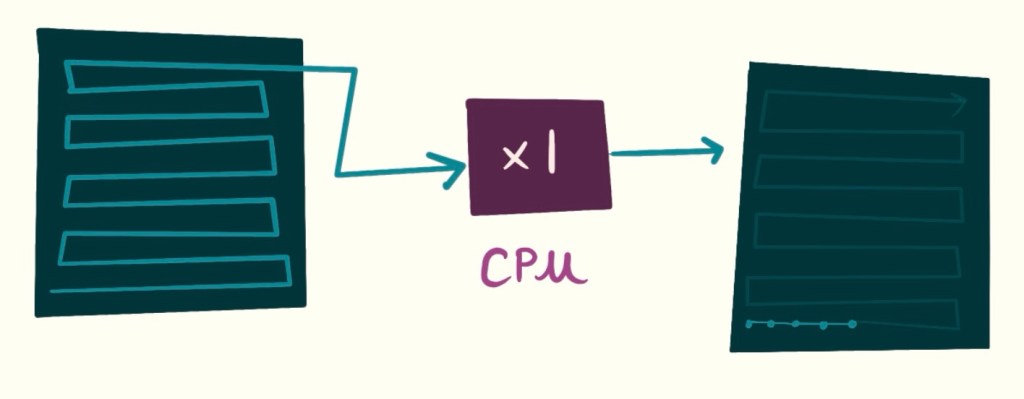

The CPU is very fast when comparing speed of one operation.

The CPU can do so many different operations; it is extremely general and can run these operations very quickly. One operation on a CPU is much faster than the equivalent operation on a GPU. However, each CPU core works through its tasks one after another, and a CPU only has a handful of cores, so as soon as you need to process large quantities of data a bottleneck appears and the whole process slows down.

As soon as the scale of the data increases, the GPU will overtake the performance of a CPU and will process data much faster.

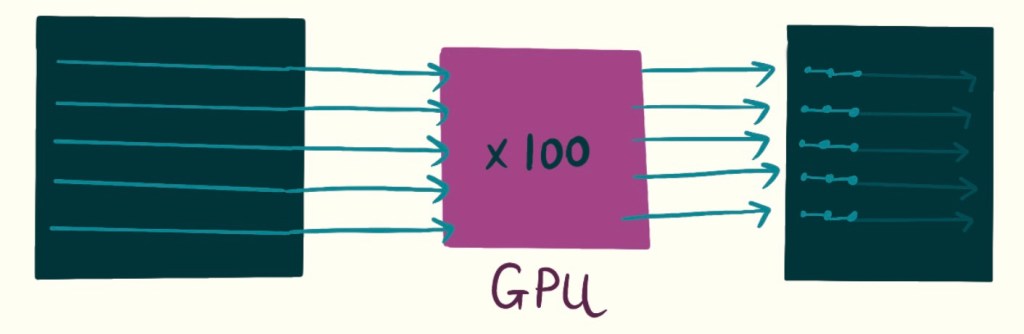

The GPU is very fast when comparing speed of thousands of operations.

In some tasks, like ML or computer graphics, the specific operations are actually quite simple. There isn’t a huge variety of complex functions – but the scale at which they need to be computed is enormous. This type of application can make use of parallel computing.

Matrices can be split up and these operations can be applied to smaller chunks of the matrix (such as the rows or columns) all at the same time, then combined at the end. This saves you waiting for one to finish before starting on the next. This is where GPUs come in: they process lots of data at the same time.
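The "hand out the rows, then combine" pattern can be sketched in Python with a pool of workers. This is only an illustration of the shape of the idea: in standard Python these threads mostly take turns rather than truly running at once, whereas a GPU does the equivalent in hardware across thousands of cores. The matrix values and the `row_total` helper are made up.

```python
from concurrent.futures import ThreadPoolExecutor

def row_total(row):
    """The simple operation applied to each chunk: add up one row."""
    return sum(row)

matrix = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
    [10, 11, 12],
]

# Hand each row to a pool of workers, then collect the answers in order.
with ThreadPoolExecutor(max_workers=4) as pool:
    row_totals = list(pool.map(row_total, matrix))

# Combine the partial answers at the end.
grand_total = sum(row_totals)

print(row_totals, grand_total)
```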

Think of a supermarket till. One extremely fast employee working on one checkout will still cause long queues at popular hours. But having a row of checkout tills serving people all at the same time will massively reduce the waiting times, even if each cashier is slower than the fast one.
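The checkout analogy can be put into numbers with a toy model. The speeds and till counts below are invented for illustration and are not real CPU or GPU figures.

```python
import math

def time_to_serve(customers, seconds_per_customer, tills):
    """Time to clear a queue when customers are shared evenly across tills."""
    customers_per_till = math.ceil(customers / tills)
    return customers_per_till * seconds_per_customer

# One very fast cashier (the CPU) versus 100 slower cashiers (the GPU).
one_customer_fast = time_to_serve(1, seconds_per_customer=1, tills=1)
one_customer_slow = time_to_serve(1, seconds_per_customer=10, tills=100)

busy_hour_fast = time_to_serve(1000, seconds_per_customer=1, tills=1)
busy_hour_slow = time_to_serve(1000, seconds_per_customer=10, tills=100)

print(one_customer_fast, one_customer_slow)   # a single customer
print(busy_hour_fast, busy_hour_slow)         # a thousand customers
```

With a single customer the fast cashier wins easily. With a thousand customers the row of slower tills finishes far sooner, which is the same crossover we see between the CPU and the GPU as the data grows.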

Both are important and both are used.

There is a place for both an effective CPU and an effective GPU. We are never replacing the role of CPUs with expensive GPUs. Instead they will both exist in tandem (sometimes even combined – Apple!). Don’t forget the role of a good CPU in AI development, which is often overshadowed by the GPU.

AI Computation

AI models are built and run using GPUs. You can’t practically run ChatGPT without them (and not just one but thousands of them).

Neural Networks are just really big matrices.

I won’t go into a full ML lesson here, but in case you didn’t know Neural Networks are essentially just big matrices. The model parameters (or weights and biases) are just matrices that are multiplied with input data to get a decision. Basically ML is just data processing using large matrices. Does this sound familiar? Think back to the computer graphics origins – essentially the same task right?
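As a minimal sketch of "weights and biases multiplied with input data", here is one tiny layer in plain Python. The numbers are made up, and real networks also apply a non-linear function after each layer and have millions or billions of weights.

```python
def layer(inputs, weights, biases):
    """One layer: multiply the inputs by the weight matrix, then add the biases."""
    return [
        sum(w * x for w, x in zip(weight_row, inputs)) + b
        for weight_row, b in zip(weights, biases)
    ]

inputs = [1.0, 2.0, 3.0]          # the input data

weights = [                       # a 2x3 weight matrix (illustrative values)
    [0.5, 0.0, -1.0],
    [1.0, 1.0, 1.0],
]
biases = [0.5, -2.0]              # one bias per output

outputs = layer(inputs, weights, biases)
print(outputs)
```

Each output only needs its own row of the weight matrix, so every row can be computed independently of the others. That independence is exactly what a GPU exploits.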

There are huge parallels between computer graphics and Machine Learning, no pun intended. Both applications need to quickly process large matrices, and the GPU was built to solve this. No wonder we are able to exploit the graphics card for improved AI computing. The GPU has become invaluable for AI: without this ability to process data in parallel, we would not have been able to reach the capability we have today. It is a hugely important innovation.

Why are they expensive?

So now you know that they are needed, but you might still be wondering why the Nvidia stock is going crazy, or why the price is skyrocketing.

GPUs (graphics cards) are more expensive than regular CPUs. This may seem counterintuitive, as the need for a CPU is at least as great as the need for a GPU. But there are a few possible causes for the massive discrepancy in price.

Firstly, GPUs are complicated to build

Building a GPU is more challenging. The system is more complex and the manufacturing requires specialist equipment and skills. This likely drives the price up.

Secondly, demand is going up.

The demand for AI and blockchain (also a GPU ally) has gone up. Perhaps due to all these amazing innovations, perhaps due to the openness and sharing of frontier AI technology, or perhaps it’s just hype? Whatever the cause, it is so clear that the demand for AI is increasing all over the world, in all applications.

Supply shortage still recovering from COVID-19

Paired with the high demand is the supply shortage during COVID-19. I’m not sure what the cause of this shortage was, but for a very long time you weren’t able to buy a new graphics card. There are lots of people who want new GPUs and who are willing to pay high prices. This means the prices have been driven up and have remained high.

Price tags include Research and Development Investment

These companies (looking at you, Nvidia) need to continue to innovate. They have large research teams, which themselves will not be making a profit (that’s research life). So the price tags of the GPUs probably include the cost of supporting these teams. You aren’t just paying for the product; you are investing in the development of the next generation of chips. I won’t complain, I think this is necessary!

It is more than just hardware.

Yes, this is also true for the CPU, but the GPU is more than just the hardware bits. There are lots of very complicated software packages and software drivers that also need building and maintaining. So your price includes these too. As a member of the AI research community, I find this extremely helpful. These companies make some great software which enables researchers from all over the globe to innovate.

Conclusions

I hope this has helped you understand why GPUs are important in Machine Learning, and what they do. AI models are only getting bigger, so the computing power we need is only going to become more important. GPUs are invaluable and will continue to be.
