It’s a quiet Friday afternoon and I’ve had a busy working week, full of writing software, writing reports, reading reports – you know the drill. So, I thought it would be interesting to let the AI write something for me. As we are being encouraged to use these AI tools more and more, why not do a quick experiment? I just want to know if an AI could replace me! Would you consider using AI to help you create content?

I, like many people, sometimes have very busy weeks, or I want to write about complex topics and I just don’t know how to start. Using an AI to help me could be hugely beneficial, but I don’t want it to make my content feel disjointed from my other work. In this post, I’m going to run a quick experiment. Let’s see what happens when I ask ChatGPT to write a blog post for me. I’ll analyse the differences between a post I have written and a post an LLM has written. We can look through the results together, and perhaps this will help you understand how you might leverage AI in your content creation. So let’s get started.

TL;DR

With an example of the style of content I wanted to create, and a description of the topic that I wanted to write about, ChatGPT was able to create a convincing and pretty good post. There were some issues with American vs British spelling, and the examples it used were a little flat and appear frequently in other content. But on the whole – it was convincing! Whether you believe in using these tools for improving productivity or whether you think they will be the killer of human creativity, they are getting pretty good and that can’t be ignored.

The Experiment

This is a very professional, very rigorous experiment, so let’s do what any good scientist should do and outline it first, to keep things somewhat fair, albeit short.

Hypothesis

ChatGPT can write content which passes for my writing.

Tools

We don’t need much: a blog topic and a method of writing the blog. I’m going to use ChatGPT, which is the GPT-4-turbo model (I’m using a free account). For the blog topic, I will describe what precision, recall and accuracy mean for ML model evaluation. Don’t worry if you have never heard of these words; you’re about to be told three different ways. I’ll keep it short!

Methodology

- I will write a very short blog post, the way I normally would (in a fraction of the time). No AI will be used – I promise, all my own words and some help from Wikipedia.

- Then I’ll go and ask ChatGPT (the free one!) to do the same thing. I’ll give it no prompting other than “Please write a short blog post for an AI explainer website explaining precision, recall and accuracy”

- Then I’ll ask it again, in a new session, to do the same thing, but this time I will share one of my previous posts (like this one on AI agents) and ask it to write the post in the same style.

Metrics

Measuring whether two pieces of text are from the same author is both subjective and complicated. This is a fundamental challenge with LLM Evaluation (which I will not dwell on here). So for this experiment, I will read each post and decide how similar it is to what I feel my writing style is, based on a previous post of mine. I will also ask ChatGPT to decide how similar the blog posts are to this same previous post. For each post, and each method of marking, we will get a score out of 10.

Let’s see what it does! Can ChatGPT sound like me? And can we tell?

Post 1: I will write the post first

Everything below I have written, without AI aid.

Model Evaluation is the process of working out just how good our AI is at its task before we set it off into the wild. This is a really important step in the ML development cycle, but how do engineers measure their models?

In this post, I will explain the difference between accuracy, precision and recall. These are three of the most important terms used in AI evaluation, so I hope this post helps you understand what they mean.

Accuracy is a general purpose measure of model success

Let’s start at the beginning, with accuracy. This is a general-purpose means of measuring AI model performance, specifically when the model is doing a classification task. For those who are unfamiliar, classification tasks are tasks where a model needs to decide which label to assign something to. Like is this photo of a cat or dog?

Accuracy is basically out of all answers given by the model – how many were correct? You can think of accuracy as the way a teacher would mark a student’s exam – a % score based on the number of questions answered correctly.

Recall measures A models ability to Identify all examples of a class

Now accuracy is good until there is an imbalance in the dataset, at this point, the accuracy score might be misleading. Imagine if the test was full of really easy questions on one topic you are good at and contained only a few questions on a topic you found more challenging. You are lucky and your accuracy might be high, which is good for you in the short term, but it isn’t a good representation of your true ability. We need some additional metrics to help.

The recall score is defined to be the number of correctly classified examples of a class, over all examples of that class in the data. It measures whether a model is good at finding all the examples of a class. A high recall on class X means that my model is likely to find every example of X that I show it.

Back to the exam analogy, you might decide to calculate a recall score for each topic. The topic you struggle most with will be revealed by using this method – despite a high accuracy.

Precision measures how many times there are false alarms.

Precision is defined to be the number of correctly classified examples of a class, over the total number of predicted examples of that same class. This time we measure how many times the prediction is wrong.

Unlike recall, which calculates a model’s ability to find all examples (not caring whether it picks some which are not the class you are looking for), precision calculates how many of the predicted examples are correct. In essence, this tells us how often the model raises a false alarm.

This is probably not my finest work, but gloss over what I’m saying, you’re about to read it two more times! I’ve tried sticking to my usual format – a longer subheading, a few metaphors and a friendly tone.
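If you prefer code to prose, the three definitions above can be sketched in a few lines of Python. This is only a minimal illustration, not how any particular library does it: the function names and the tiny cat and dog dataset are made up for this example.

```python
def accuracy(y_true, y_pred):
    """Share of all predictions that match the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def recall(y_true, y_pred, label):
    """Of all real examples of `label`, the share the model found."""
    found = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    actual = sum(1 for t in y_true if t == label)
    return found / actual if actual else 0.0


def precision(y_true, y_pred, label):
    """Of all predictions of `label`, the share that were right."""
    found = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
    predicted = sum(1 for p in y_pred if p == label)
    return found / predicted if predicted else 0.0


# Made-up data: 3 cat photos and 5 dog photos; the model misses one cat.
y_true = ["cat", "cat", "cat", "dog", "dog", "dog", "dog", "dog"]
y_pred = ["cat", "cat", "dog", "dog", "dog", "dog", "dog", "dog"]

print("accuracy:", accuracy(y_true, y_pred))
print("recall (cat):", recall(y_true, y_pred, "cat"))
print("precision (cat):", precision(y_true, y_pred, "cat"))
```

In this toy data the model gets 7 of 8 photos right, finds 2 of the 3 cats (recall), and is right both times it says "cat" (precision).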

Post 2: Lightly Prompted ChatGPT Created Post

Let’s try the LLM, with only a little bit of guidance. Here we go – my prompt to ChatGPT is:

I’m writing a blog for an AI explainer website, which explains what accuracy recall and precision are. I don’t need titles – I just need an introduction and an explanation of accuracy recall and precision.

The AI generated blog is below.

When evaluating the performance of an AI model, especially in classification tasks, it’s important to understand key metrics that measure its effectiveness. Three fundamental metrics are accuracy, precision, and recall. Each provides a different perspective on how well the model is performing and is crucial for assessing its real-world applicability.

Accuracy measures the overall correctness of the model by calculating the proportion of correctly classified instances out of all instances. While accuracy is a useful metric when classes are balanced, it can be misleading in cases where one class is significantly more frequent than others. For example, if a model predicts whether an email is spam, and 95% of emails are not spam, a model that always predicts “not spam” will have 95% accuracy but be practically useless.

Precision focuses on the correctness of positive predictions. It is the ratio of true positive predictions to the total number of times the model predicted a positive outcome. High precision means that when the model predicts a positive class, it is usually correct. This is especially important in scenarios where false positives carry significant consequences, such as diagnosing a serious disease—incorrectly predicting a patient has the disease (false positive) could lead to unnecessary stress and treatments.

Recall (also known as sensitivity) measures the model’s ability to identify all actual positive cases. It is the ratio of true positive predictions to the total actual positives. High recall ensures that most of the actual positive instances are detected, which is critical in cases where missing a positive case is costly, such as detecting fraudulent transactions—failing to flag a fraudster could result in substantial financial losses.

Balancing precision and recall is often necessary, as improving one can reduce the other. The choice between them depends on the specific needs of the application, making it essential to consider both when evaluating an AI model’s effectiveness.

The content is correct and clearly written, but I do feel like it is missing something. There are no metaphors to help you understand, and the text doesn’t feel like it is written to ‘you’ as a reader. It essentially feels like it is coming from a textbook.
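The spam example in that post is easy to check for yourself. Here is a minimal sketch, assuming a made-up inbox of 100 emails with the same 95% / 5% split, and a "model" that always answers "not spam". The variable names are just for illustration.

```python
# Made-up inbox: 100 emails, 5 of which are spam (the 95% / 5% split).
y_true = ["spam"] * 5 + ["not spam"] * 95

# A lazy "model" that always predicts "not spam".
y_pred = ["not spam"] * len(y_true)

# Accuracy: share of all emails labelled correctly.
correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
accuracy = correct / len(y_true)

# Recall for the spam class: share of real spam emails that were caught.
spam_found = sum(1 for t, p in zip(y_true, y_pred) if t == "spam" and p == "spam")
spam_recall = spam_found / y_true.count("spam")

print("accuracy:", accuracy)
print("recall (spam):", spam_recall)
```

The accuracy looks great on paper, yet the recall for spam is zero because not a single spam email is caught.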

Post 3: Let’s get the AI to generate it in my style

In ChatGPT’s defence, my prompt was lightweight on details and I didn’t give it a helping hand for style and tone. So this time, I’m giving it a link to one of my blogs as a style inspiration. I will just add the same formatting I normally do – but I will not tweak the words.

I’m writing a blog for an AI explainer website, which explains what accuracy recall and precision are. I don’t need titles – I just need an introduction and an explanation of accuracy recall and precision. Could you copy the style of the post body from here: https://thealgorithmedit.com/2025/02/26/understanding-ai-agents-types-and-functions/

Everything below is written by AI:

In the realm of artificial intelligence and machine learning, evaluating the performance of models is crucial to ensure their effectiveness and reliability. Three fundamental metrics—accuracy, precision, and recall—serve as the cornerstone for this evaluation, each offering unique insights into a model’s performance.

Accuracy represents the proportion of correct predictions made by the model out of all predictions. It provides a general sense of the model’s overall performance. However, in datasets where classes are imbalanced, accuracy can be misleading. For instance, if 95% of emails are non-spam and 5% are spam, a model that always predicts “non-spam” would achieve 95% accuracy but fail to identify any spam emails.

Precision focuses on the quality of positive predictions. It is defined as the number of true positive predictions divided by the total number of positive predictions (true positives plus false positives). High precision indicates that when the model predicts a positive class, it is usually correct. This metric is particularly important in scenarios where false positives are costly. For example, in email spam detection, high precision ensures that legitimate emails are not mislabeled as spam.

Recall, also known as sensitivity, measures the model’s ability to identify all actual positive cases. It is calculated by dividing the number of true positive predictions by the total number of actual positives (true positives plus false negatives). High recall indicates that the model successfully captures most of the positive instances. This is crucial in situations where missing a positive case has serious consequences, such as in medical diagnoses where failing to detect a disease could be life-threatening.

Balancing precision and recall is often necessary, as improving one can lead to a decline in the other. Depending on the specific application and the associated costs of false positives and false negatives, one metric may be prioritized over the other to optimize the model’s performance effectively.

I can already see some mistakes! I use British spelling, so “optimize” and “prioritize” are not how I would spell these words. I think perhaps it hasn’t read the article properly; I don’t feel like this is in my style at all.

Post 4: A final go, let’s copy in the inspiration directly.

I got a little worried that maybe ChatGPT had difficulty reading the text from the link. The web page has more than just the blog post text. So this final time I’m pasting some of the body of my previous article in, using the same prompt as above, but with the first three paragraphs from that link. Let’s see if it has better luck with the content embedded directly in the prompt.

AI models are often judged on how well they perform, but what does “perform well” actually mean? When evaluating an AI system—especially one that makes predictions, like a spam filter or a medical diagnosis tool—three key metrics are used: accuracy, recall, and precision. Understanding these metrics helps us grasp how reliable an AI system is and whether it’s suited for a particular task.

In this post, we’ll break down accuracy, recall, and precision in a way that makes sense. By the end, you’ll have a clear picture of what these terms mean and why they matter when assessing AI performance.

Accuracy Measures Overall Correctness

Accuracy tells us how often the model’s predictions are correct. It’s the proportion of all predictions—both positive and negative—that were classified correctly. While accuracy is a useful starting point, it can be misleading in cases where the dataset is imbalanced. For example, if a model predicts “not spam” 99% of the time in an email filter, but only 1% of emails are actually spam, the accuracy will be high, but the model will be practically useless.

Recall Captures How Much The Model Finds

Recall focuses on how well the model identifies all relevant instances. It’s the percentage of actual positives that were correctly classified. In a medical setting, recall would measure how many people with a disease were correctly diagnosed. A high-recall model minimizes false negatives, which is crucial when missing a case has serious consequences—like failing to detect cancer in a screening test.

Precision Ensures What’s Found Is Relevant

Precision, on the other hand, tells us how many of the predicted positives were actually correct. It’s the percentage of positive predictions that were truly positive. A model with high precision minimizes false positives, which is essential in situations where incorrect predictions have costly consequences—such as wrongly accusing someone of fraud.

Balancing these metrics is key because improving one often comes at the cost of another. Depending on the application, some trade-offs are acceptable, while others aren’t. A spam filter, for example, might favor precision to avoid blocking important emails, while a medical test might prioritize recall to ensure no cases are missed. Understanding these metrics helps in designing AI systems that work effectively in the real world.

That last one was way better, right? The introduction was much more my style, with questions, directly talking to the reader, and outlining what is in this post. There are some examples, but it is still light on metaphors. And yes, I can still see the American spellings!

What am I looking for?

When reading this text, I’m going to bear the following things in mind. These are some tricks I’ve picked up along the way which help determine if the content is AI-written or not.

That’s just plain wrong, AI Can Hallucinate Facts

Are there errors – factual or grammatical? AI does tend to make up facts, which sound plausible (known as hallucination). Humans are much more likely to make grammatical errors or spelling errors.

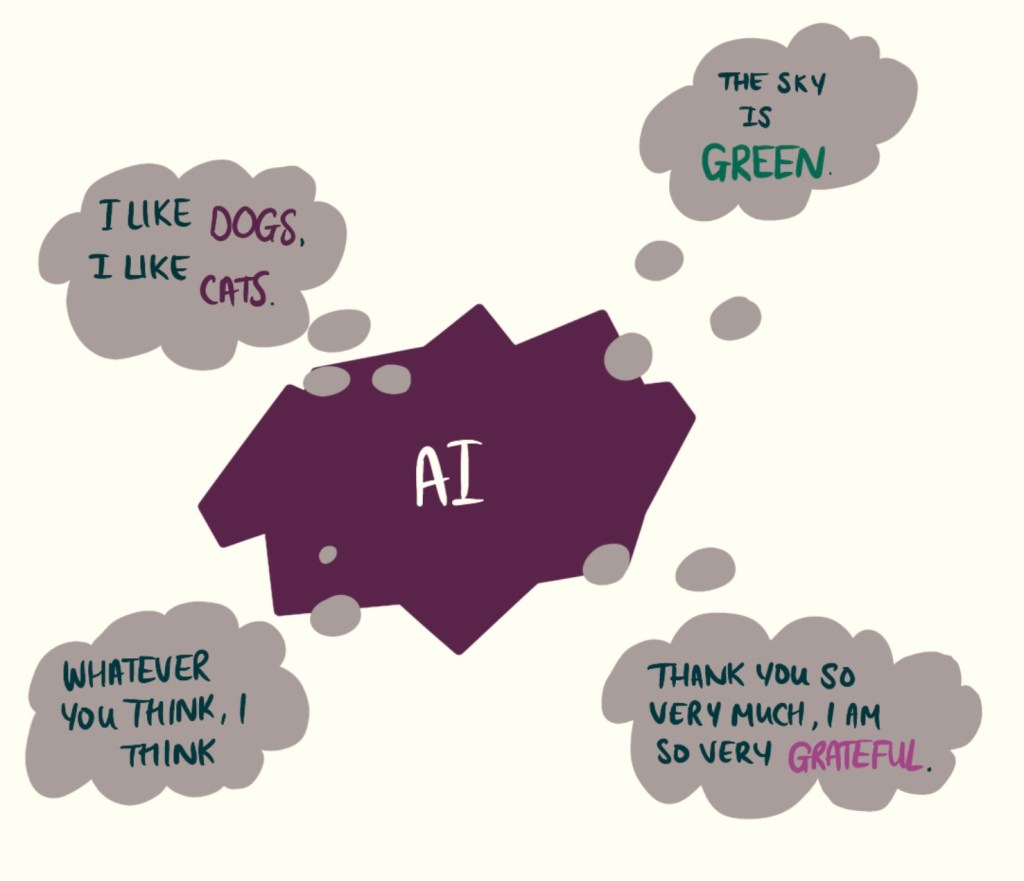

What a pleasant AI, the AI will aim to please with politeness

Is the tone of the text too polite or formal? Many language models have been specifically trained to match human preferences (known as human alignment), so they often respond in a very polite tone. Human creators are more likely to have an inconsistent tone, especially in longer, unedited texts.

You’re giving me mixed messages, what do you actually believe?

Does the text avoid overly strong opinions? Linking back to human alignment, AI tends to avoid strong opinions. This reflects what most of us prefer in a conversation partner. We, the humans, are often not afraid to voice our opinions (wanted or not)!

I’ve heard this all before, the AI has no new opinions!

Are there any unique viewpoints, or are they just summarised facts? A fundamental weakness of AI (at least the AI of today) is that it can’t come up with new viewpoints; it just has an incredible ability to file away all the opinions it has read during its creation and summarise them when prompted.

Findings

So let’s see what the two evaluations resulted in. The higher the score, the more the content sounded like mine.

| Test Blog Post | My Score and Comments | ChatGPT’s Score and Comments |
|---|---|---|
| Take 1 (My Writing) | [10 / 10] My writing, so you know I can’t comment. | [8 / 10] GPT had a few things to say though: the post effectively explains key AI evaluation metrics (accuracy, precision, and recall) in a clear and accessible manner. The tone is informative and engaging, with relatable analogies. Minor improvements could be made in capitalization consistency (e.g., “A models” → “a model’s”) and terminology uniformity. |
| Take 2 (Lightly Prompted) | [4 / 10] I thought this was too formal, not engaging with the reader at all, though the technical detail was clear and correct. | [9 / 10] AI thought this was well-structured and precise, with clear explanations, and that the tone aligns well with The Algorithm Edit. It is slightly more formal than the reference post, lacking some of the conversational elements. |
| Take 3 (Linked Blog in Prompt) | [6 / 10] Again I thought this was too formal, but it had clear explanations with examples. There were some American spellings. The structure didn’t quite match (subheadings aren’t there) and it doesn’t engage directly with the reader. | [9 / 10] A technically accurate and well-organized post that matches the target audience. The explanations and examples are strong, though the style leans slightly more formal than the reference article. |
| Take 4 (Pasted Blog in Prompt) | [9 / 10] I thought this was very close to my style, with the introduction, questions, talking to the reader directly, examples and the subheadings as sentences. Again minus a point for American spellings. | [9.5 / 10] Engaging, structured, and highly readable. The conversational yet informative tone, use of rhetorical questions, and bold headings improve clarity. Slightly more casual than the reference post, but this enhances readability rather than detracting from it. |

The AI and I only agreed on one post (out of FOUR)

So ChatGPT didn’t like my post, which is understandable – I am a human after all! But what is interesting is we both agreed that the final (Take 4) post strongly aligned with my style, whereas the others felt more formal and textbook-like. Re-read Posts 2 and 3 and you’ll see that the text never interacts with you, the reader, in any way.

Example Content Helps with Style Matching

You can see the improvements each time we used this LLM to generate content for me. Giving the model an example of the style has helped it match the content that I usually write. It uses things like rhetorical questions:

AI models are often judged on how well they perform, but what does “perform well” actually mean? (Post 4)

It included subheadings of short sentences, which is something I frequently use:

Accuracy Measures Overall Correctness (Post 4)

However, it still used American spelling! It didn’t quite pick up on that.

… medical test might prioritize recall…

The AI isn’t Inventive when it comes to examples

It’s funny that three different AI-generated posts use the same two illustrative examples to help explain the differences (medical diagnosis and email spam filter). I imagine these are probably used frequently to describe the differences over the internet.

Conclusions

After this experiment, I’ll be using AI to enhance my work, and wherever I do, I’ll be incorporating some of my other content to help it sound more natural. I’ve seen how well it can match style and tone with specific instructions. The areas where I will still need to contribute are examples, opinions and editing.

Some people might argue that AI will replace content creators, and yes, we’ve seen that the quality of AI-generated work can be pretty impressive. However, I believe that AI isn’t here to replace us. You’ll still need a human creator to plan the work, weave in personal experiences, and use helpful metaphors to add that special “flair” and authenticity.

So, what do you think? Can you spot any signs that AI might have written this text? Will you be using AI in your content creation, and if so, how? Drop a comment below!
